Matt has taken the bait and asked me five good questions about my snarky, contrarian post on climate adaptation. Here are his questions and my answers.

Question 1. This paper will be published soon by the JPE. Costinot, Arnaud, Dave Donaldson, and Cory B. Smith. Evolving comparative advantage and the impact of climate change in agricultural markets: Evidence from 1.7 million fields around the world. No. w20079. National Bureau of Economic Research, 2014. http://www10.iadb.org/intal/intalcdi/PE/2014/14183.pdf

It strongly suggests that adaptation will play a key role protecting us. Which parts of their argument do you reject and why?

Answer: This looks like a solid paper, much more serious than the average paper I get to review, but I have not yet studied it. I’m slow, so it would take me a while to unpack all the details and study the data and model. That said, from a quick look, I think there are a couple of points I can make right now.

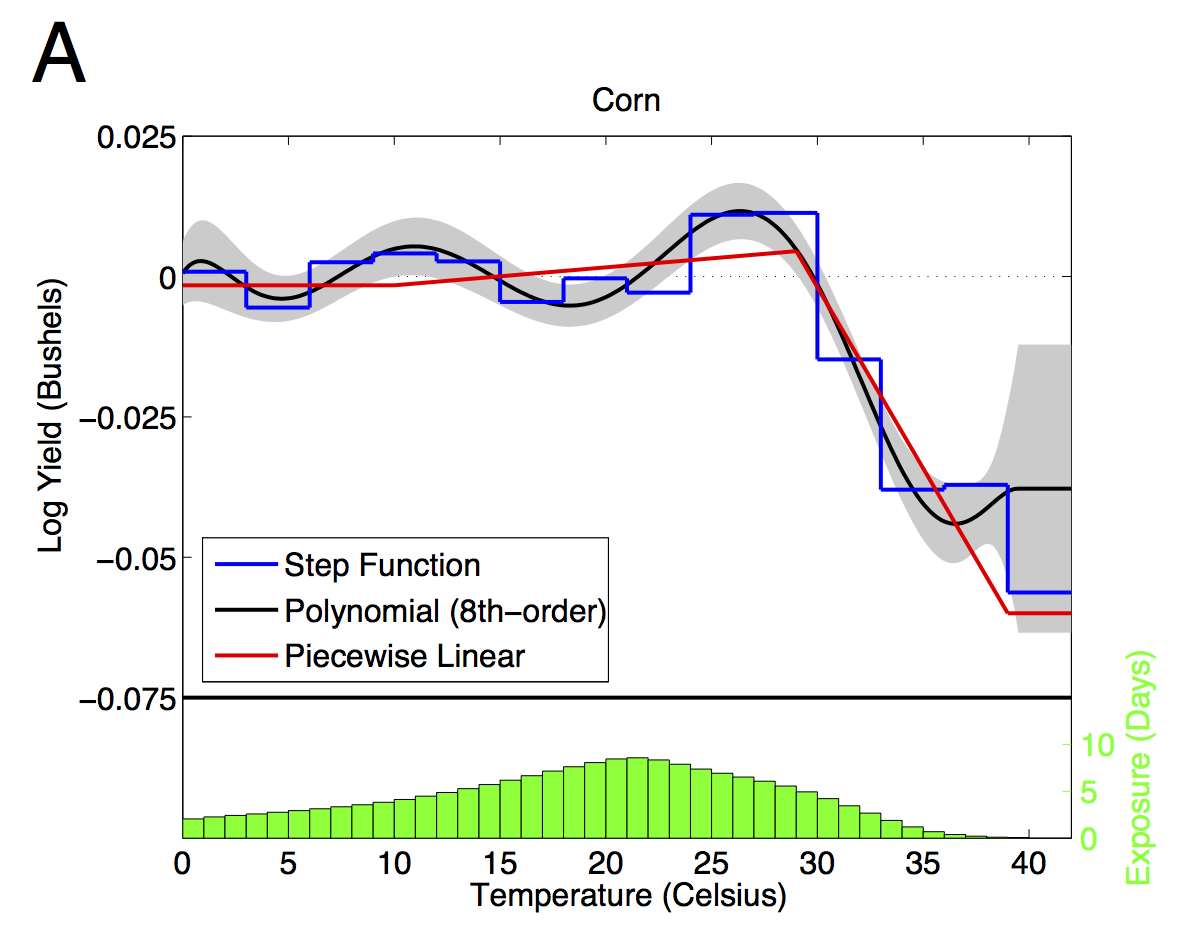

First, and most importantly, I think we need to be clear about the differences between (i) adaptation; (ii) price response and trade; (iii) innovation that would happen anyway; (iv) climate-change-induced innovation; and (v) price-induced innovation. I’m pretty sure this paper is mainly about (ii), not about adaptation as conventionally defined within the literature, although there appears to be some adaptation too. I need to study it much more to get a sense of the magnitudes of the different elasticities they estimate, and whether I think they are plausible given the data.

To be clear: I think adaptation, as conventionally defined, pertains to changing production behavior in response to a changing climate while holding all other factors (like prices, trade, technology, etc.) constant. My chief annoyance is that people are mixing up these concepts. My second annoyance is that too many are perpetually optimistic; some economists wear it like a badge, and I don’t think evidence or history necessarily backs up that optimism.

Question 2. If farmers know that they face uncertain risks due to climate change, what portfolio choices can they engage in to reduce the variability of their earnings? What futures markets exist to allow for hedging? If a risk-averse economic agent knows "that she does not know" what ambiguous risks she faces, she will invest in options to protect herself. Does your empirical work capture this medium-term rational investment plan? Or do you embrace the Berkeley behavioral view of economic agents as myopic?

Some farmers have subsidized crop insurance (nearly all in the U.S. do). But I don't think insurance much affects production choices at all. Futures markets seem to “work” pretty well, and futures prices could be influenced by anticipated climate change. We actually use a full-blown rational expectations model to estimate how much prices might be affected by anticipated climate change right now: about 2% higher than they otherwise would be.

Do I think people are myopic? Very often, yes. Do I think markets are myopic? By and large, no, but maybe sometimes. I believe less in bubbles than Robert Shiller does, even though I'm a great admirer of his work. Especially for commodity markets (if not the macroeconomy), I think rational expectations models are a good baseline for thinking about commodity prices, very much including food commodity prices. And I think rational expectations models can have other useful purposes, too. I actually do think the Lucas enterprise has created some useful tools, even if I find the RBC center of macro more than a bit delusional.

I think climate and anticipated climate change will affect output (for good and bad), which will affect prices, and that prices will affect what farmers plant, where they plant it, and what gets traded. But none of this, I would argue, is what economists conventionally refer to as adaptation. A little more on response to prices below...

Again, my beef with the field right now is that we are too blasé about the miracle of adaptation. It’s easy to tell horror stories that the data cannot refute. Much of the economist tribe won’t look there; it feels taboo. The JPE won’t publish such an article. We have blinders on when uncertainty is our greatest enemy.

Question 3. If specific farmers at specific locations suffer, why won't farming move to a new area with a new comparative advantage? How has your work made progress on the "extensive margin" of where we grow our food in the future?

The vast majority of arable land is already cropped. That which isn’t is in extremely remote and/or politically difficult parts of Africa. Yes, there will be substitution and shifting of land. But these shifts will come about because of climate-induced changes in productivity. In other words, first-order intensive-margin effects will drive second-order extensive-margin effects. The second-order effects (some land will move into production, some out) will roughly amount to zero. That’s what the envelope theorem says. To a first approximation, adaptation in response to climate change will have zero aggregate effect, not just with respect to crop choice, but with respect to other management decisions as well. I think Nordhaus himself made this point a long time ago.
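To make the envelope-theorem point concrete, here is a textbook sketch for a single stylized farmer. It is not the model of any paper discussed here; the notation (profit $\pi$, a management or crop choice $x$, climate $c$, a small climate change $\Delta$) is my own, and it assumes an interior optimum with $\pi_{xx} < 0$.

$$
\begin{aligned}
V(c) &= \max_x \pi(x, c), \qquad x^*(c) = \arg\max_x \pi(x, c) \\
\frac{dV}{dc} &= \pi_x(x^*, c)\,\frac{dx^*}{dc} + \pi_c(x^*, c) = \pi_c(x^*, c) \qquad \text{since } \pi_x(x^*, c) = 0 \\
V(c+\Delta) - \pi(x^*(c),\, c+\Delta) &\approx -\frac{1}{2}\,\frac{\pi_{xc}^2}{\pi_{xx}}\,\Delta^2 \;\ge\; 0
\end{aligned}
$$

The second line says the marginal effect of climate on profit is the same whether or not the farmer adjusts $x$. The third line, which uses $dx^*/dc = -\pi_{xc}/\pi_{xx}$, says the gain from adjusting relative to standing pat is proportional to $\Delta^2$, so for small climate changes it is small next to the direct effect $\pi_c \Delta$.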

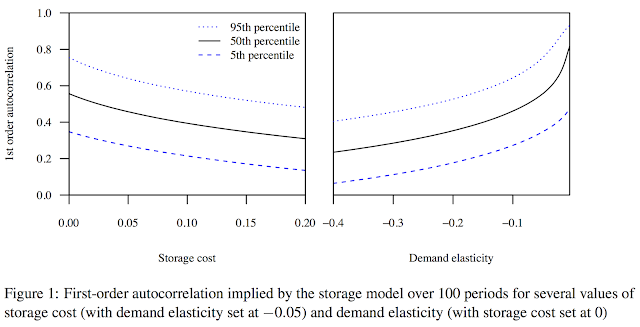

However, there will also be intensive- and extensive-margin responses to prices. Those will be larger than zero. But I think the stylized facts about commodity prices (from the rational expectations commodity price model, plus other evidence) tell us that supply and demand are extremely inelastic.
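Why inelasticity matters can be seen with a back-of-the-envelope calculation. The sketch below is mine, not the rational expectations model mentioned above: it assumes constant-elasticity demand and supply and a climate shock that shifts supply proportionally at every price. The function name and the elasticity values are hypothetical, chosen only for illustration.

```python
import math


def equilibrium_response(supply_shift, eps_demand, eps_supply):
    """Percent changes in price and quantity after a proportional supply shift.

    Hypothetical stylized model: demand Q = A * P**(-eps_demand) and supply
    Q = B * s * P**eps_supply, where s = 1 + supply_shift (e.g. -0.10 means
    10% less output at every price).
    """
    dln_s = math.log(1.0 + supply_shift)
    # Market clearing in log changes: -eps_d * dlnP = dln_s + eps_s * dlnP
    dln_p = -dln_s / (eps_demand + eps_supply)
    # Equilibrium quantity moves along the demand curve
    dln_q = -eps_demand * dln_p
    return math.exp(dln_p) - 1.0, math.exp(dln_q) - 1.0


if __name__ == "__main__":
    # Hypothetical elasticities, from very inelastic to unit-elastic
    for eps in (0.1, 0.3, 1.0):
        dp, dq = equilibrium_response(-0.10, eps, eps)
        print(f"elasticities {eps}: price {dp:+.1%}, quantity {dq:+.1%}")
```

With both elasticities at 0.1, a 10% supply loss raises the price by roughly 69% in this sketch; at unit elasticities the price rise is only about 5%. The more inelastic the market, the more of the adjustment shows up in prices rather than in quantities.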

Question 4. The key agriculture issue in adapting to climate change appears to be reducing international trade barriers and improving storage and reducing geographic trade costs. Are you pessimistic on each of these margins? Container ships and refrigeration keep getting better, don't they?

I think storage will improve, because almost anyone can do it, and there’s a healthy profit motive. It’s a great diversification strategy for deep-pocketed investors. I think many are already in this game and more will get into it soon. Greater storage should quell a good share of the greater volatility, but it actually causes average prices to rise, because there will be more spoilage. But I’m very “optimistic,” if you will, about the storage response. I worry somewhat that the storage response will be too great.

But I’m pretty agnostic to pessimistic about everything else. Look at what happened in earlier food price spikes. Many countries started banning exports. It created chaos and a huge “bubble” (not sure if it was truly rational or not) in rice prices. Wheat prices, particularly in the Middle East, shot up much more than world prices because governments could no longer maintain the subsidized floors. As times get tougher, I worry that politics and conflict could turn crazy. It’s the crazy that scares me. We’ve had a taste of this, no? The Middle East looks much less stable after the food price spikes than before. I don’t know how much food prices are to blame, but I think they are a plausible causal factor.

Question 5. With regard to my Climatopolis work, recall that my focus is the urbanized world. The majority of the world already lives in cities, and cities have a comparative advantage in adapting to climate conditions due to air conditioning, higher income, and public goods investments to increase safety.

To be fair: I’m probably picking on the wrong straw man. What’s bothering me these days has much less to do with your book and more to do with the papers that come across my desk every day. I think people are being sloppy and a bit closed-minded, and yes, perhaps even tribal. I would agree that adaptation in rich countries is easier. Max Auffhammer has a nice new working paper looking at air conditioning in California: people will use air conditioning more, and people in some areas who don’t currently have air conditioners will install them. That’s adaptation. This kind of adaptation will surely happen, and it is surely good for people but bad for energy conservation. It’s a really neat study backed by billions of billing records. But the adaptation number, an upper-bound estimate, is small.

I thought of you and your book because people at AAEA were making some of the same arguments you made, and because you’re a much bigger fish than most of the folks in my little pond. Also, I think your book embodies many economists’ perhaps correct, but perhaps gravely naïve, what-me-worry attitude.